The 2024 GPU Technology Conference (GTC) was held in San Jose, CA, from March 18th to March 21st, 2024. The conference covered a wide range of topics, and NVIDIA used the keynote to unveil new hardware. It introduced two significant new products: the Blackwell B200 GPU and the GB200 “Superchip.”

The Blackwell platform is named in honor of David Harold Blackwell, a distinguished mathematician renowned for his work in game theory and statistics and the first Black scholar to be inducted into the National Academy of Sciences. The platform is the successor to NVIDIA’s Hopper architecture, introduced two years earlier.

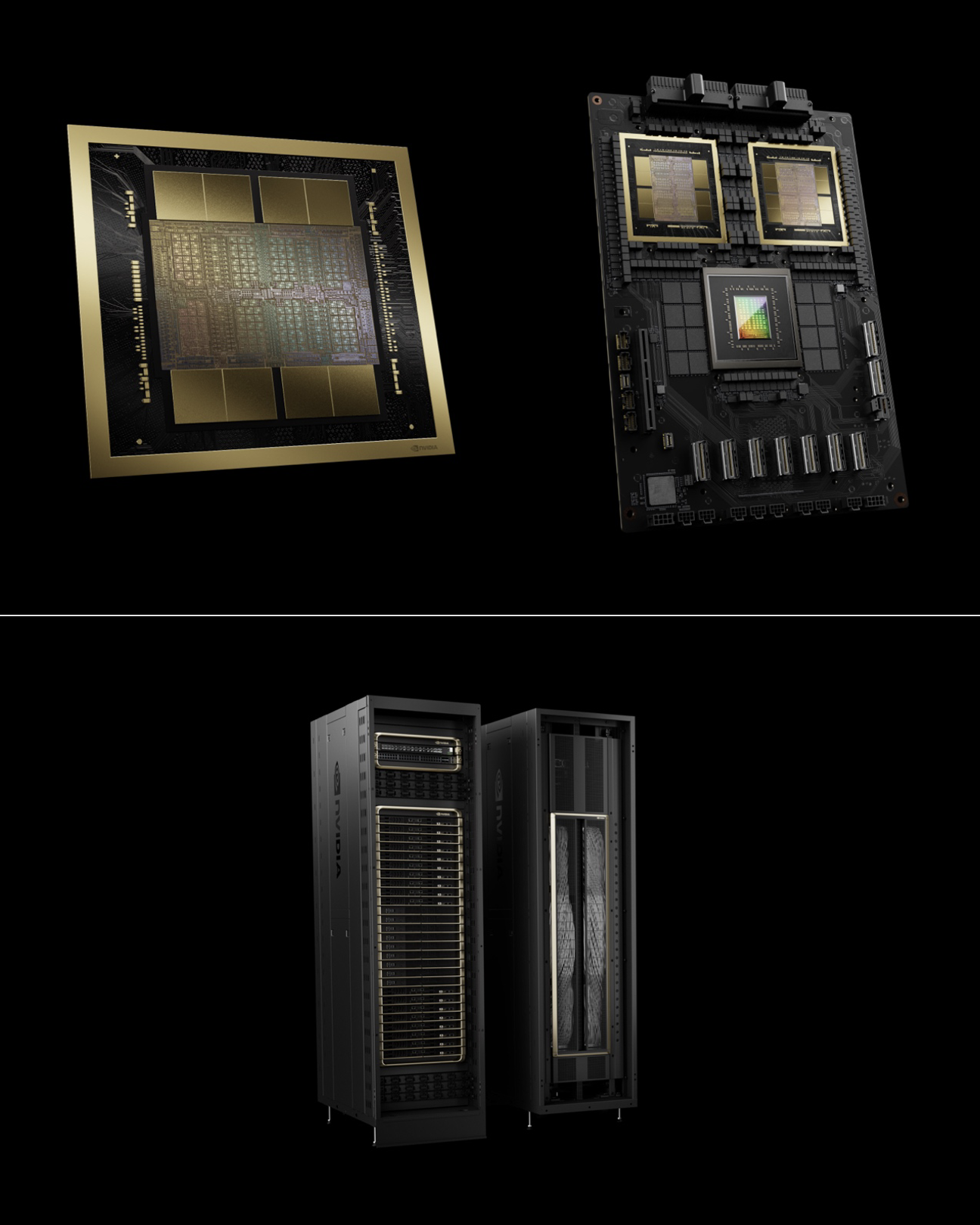

One of the new products unveiled as part of the Blackwell platform is the Blackwell B200 GPU. Per NVIDIA, the GPU uses 208 billion transistors to deliver up to 20 petaflops of FP4 performance. FP4 is a 4-bit floating-point format used in AI/ML applications: reducing precision to 4 bits raises throughput and lowers memory usage and bandwidth requirements, trading a slight loss of accuracy for substantial efficiency gains. The B200 GPU features a second-generation transformer engine that combines new micro-tensor scaling support with NVIDIA’s dynamic range management algorithms, integrated into the NVIDIA TensorRT-LLM and NeMo Megatron frameworks. According to NVIDIA, this doubles the compute, bandwidth, and model size the GPU can support. NVIDIA’s announcement also claims that Blackwell runs generative AI large language models at up to 25 times lower cost and energy consumption than Hopper.
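To make the precision trade-off concrete, here is a minimal sketch of block-scaled 4-bit quantization. It is not NVIDIA's implementation. It assumes an E2M1-style value grid (one sign bit, two exponent bits, one mantissa bit) and a hypothetical block size of four values sharing one scale factor, which is roughly the idea behind scaling small groups of values rather than a whole tensor. The names `quantize_block`, `dequantize_block`, and `quantize` are illustrative only.

```python
# Illustrative sketch only: block-scaled 4-bit float quantization.
# Assumption: an E2M1-style grid; these are the magnitudes it can represent.
FP4_MAGNITUDES = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]
FP4_MAX = FP4_MAGNITUDES[-1]


def quantize_block(values):
    """Return (scale, codes): one shared scale plus a signed grid value per input."""
    peak = max(abs(v) for v in values)
    # Map the largest magnitude in the block onto the largest grid value.
    scale = peak / FP4_MAX if peak > 0 else 1.0
    codes = []
    for v in values:
        target = abs(v) / scale
        # Round to the nearest representable magnitude.
        nearest = min(FP4_MAGNITUDES, key=lambda m: abs(m - target))
        codes.append(nearest if v >= 0 else -nearest)
    return scale, codes


def dequantize_block(scale, codes):
    """Reconstruct approximate values from the shared scale and grid codes."""
    return [c * scale for c in codes]


def quantize(values, block_size=4):
    """Quantize and immediately dequantize, block by block, to expose the error."""
    out = []
    for start in range(0, len(values), block_size):
        block = values[start:start + block_size]
        scale, codes = quantize_block(block)
        out.extend(dequantize_block(scale, codes))
    return out
```

With only eight magnitudes per sign, values that fall between grid points are rounded, which is where the accuracy loss comes from. The per-block scale limits that loss by letting each small group of values use the full grid.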

The second new product is the GB200 Grace Blackwell Superchip, which connects two NVIDIA B200 Tensor Core GPUs to an NVIDIA Grace CPU over a 900GB/s ultra-low-power NVLink chip-to-chip interconnect. Each B200 GPU carries up to 192GB of HBM3e memory with 8TB/s of memory bandwidth. These advancements enable more efficient training of large AI models that previously required massive computing power. To meet the needs of cloud providers and large organizations, NVIDIA offers complete systems such as the NVL72 racks and DGX SuperPOD. The GB200 NVL72 is a multi-node, liquid-cooled, rack-scale system for compute-intensive workloads. It combines 36 Grace Blackwell Superchips, for a total of 72 Blackwell GPUs and 36 Grace CPUs, interconnected by fifth-generation NVLink. The rack acts as a single GPU with 1,440 petaflops of FP4 Tensor performance, up to 13.5TB of HBM3e memory, and 576TB/s of aggregate memory bandwidth. A next-generation NVLink Switch can also connect up to 576 GPUs for seamless communication between them.
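The rack-level figures follow from the per-GPU numbers quoted above, and a short calculation reproduces them. This sketch assumes that the 20 petaflops, 192GB, and 8TB/s figures apply per B200 GPU, and that the 13.5TB total counts 1,024GB per TB. The constant and function names are illustrative.

```python
# Back-of-the-envelope check of the GB200 NVL72 totals.
# Assumption: per-GPU figures are 20 PFLOPS FP4, 192 GB HBM3e, 8 TB/s.
SUPERCHIPS_PER_RACK = 36
GPUS_PER_SUPERCHIP = 2
CPUS_PER_SUPERCHIP = 1
FP4_PFLOPS_PER_GPU = 20
HBM3E_GB_PER_GPU = 192
BANDWIDTH_TBPS_PER_GPU = 8


def nvl72_totals():
    """Aggregate per-GPU figures across one 36-superchip rack."""
    gpus = SUPERCHIPS_PER_RACK * GPUS_PER_SUPERCHIP
    hbm_gb = gpus * HBM3E_GB_PER_GPU
    return {
        "gpus": gpus,
        "cpus": SUPERCHIPS_PER_RACK * CPUS_PER_SUPERCHIP,
        "fp4_pflops": gpus * FP4_PFLOPS_PER_GPU,
        "hbm3e_gb": hbm_gb,
        # Assumption: the quoted 13.5TB uses 1,024 GB per TB.
        "hbm3e_tb": hbm_gb / 1024,
        "bandwidth_tbps": gpus * BANDWIDTH_TBPS_PER_GPU,
    }


if __name__ == "__main__":
    for name, value in nvl72_totals().items():
        print(f"{name}: {value}")
```

Under these assumptions the totals come out to 72 GPUs, 36 CPUs, 1,440 petaflops, 13.5TB, and 576TB/s, matching the quoted rack specification.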

The new Blackwell platform is expected to be widely adopted by major cloud providers, server makers, and leading AI companies. It is supported by NVIDIA’s software stack, which includes the AI Enterprise platform.

The GB200 will also be available on NVIDIA’s DGX Cloud, which offers dedicated access to infrastructure along with tailored software for building and deploying advanced generative AI models.

In addition to the Blackwell platform, NVIDIA introduced NVIDIA Quantum Cloud, a cloud service designed to give researchers and developers access to quantum computing clusters and quantum hardware. It is based on the company’s open-source CUDA-Q quantum computing platform and is delivered as a microservice. The service integrates with third-party quantum software to accelerate scientific exploration in chemistry, biology, and materials science.

Generative AI has sparked a wave of development activity across many sectors. NVIDIA NIM (NVIDIA Inference Microservices) is part of NVIDIA AI Enterprise and offers a suite of optimized, cloud-native microservices that streamline the deployment of generative AI models in corporate settings. It lets developers access and integrate AI models through standard APIs, even without specialized AI knowledge. NIM bundles domain-specific libraries, inference engines, and specialized code for domains such as language, speech, video, and healthcare, with the goal of improving the accuracy and performance of AI applications in each scenario.
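As a rough illustration of what calling a model through a standard API can look like, the sketch below builds (but does not send) an HTTP request in the widely used chat-completions JSON shape. The base URL, the `/v1/chat/completions` path, and the model name `example-llm` are assumptions made for this example and are not taken from the announcement. Check the documentation of the specific microservice for its real endpoint and model identifiers.

```python
import json
import urllib.request


def build_chat_request(prompt, base_url="http://localhost:8000", model="example-llm"):
    """Build a POST request for a chat-style inference endpoint.

    Assumptions: base_url, the endpoint path, and the model name are
    hypothetical placeholders, not values confirmed by the source.
    """
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": 128,
    }
    return urllib.request.Request(
        url=f"{base_url}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    request = build_chat_request("Summarize the GTC 2024 keynote in one sentence.")
    # Inspect the request instead of sending it; sending would require a running service.
    print(request.get_method(), request.full_url)
    print(request.data.decode("utf-8"))
```

The application only needs to know the request and response shape. The model-specific optimization stays inside the microservice, which is why no specialized AI knowledge is needed on the client side.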

NVIDIA also announced Project GR00T, a general-purpose foundation model for humanoid robots, to drive robotics and embodied AI breakthroughs. The company unveiled a new computer called Jetson Thor, designed as a computing platform for complex tasks and safe human-robot interaction. Jetson Thor features a next-generation GPU with a transformer engine for running multimodal generative AI models like GR00T. The updated Isaac platform provides new tools like Isaac Lab for reinforcement learning and OSMO for scaling robot development workflows.

NVIDIA Omniverse continues to evolve. Omniverse Cloud was announced as a platform-as-a-service offering for developing generative AI-enabled OpenUSD (Universal Scene Description) applications. It provides a full-stack cloud environment with APIs, SDKs, and services that enterprise developers can use to build advanced OpenUSD-based tools and applications for 3D workflows and digital twins.